Engines of Adaptation

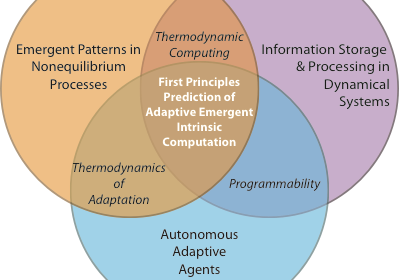

Remarkably robust and intelligent systems operate across the tremendous time and length scales of the natural world. At one extreme, nanoscale motor proteins shuttle nutrients along microtubule highways, adapting their loads to their host cell’s needs. At the other, an albatross on a 3,000-mile trek efficiently tracks and adaptively leverages wind fluctuations to radically reduce its energy consumption as it circumnavigates an entire ocean without touching down. What these behaviors share across such immense scales is what one can call adaptive emergent intrinsic computation. Intrinsic computation here means information processing supported reliably and robustly by a physical substrate that is maintained away from equilibrium by internal and environmental energy and entropy fluxes. Emergent refers to how a system leverages the rich palette of patterns inherent in nonlinear systems to greatly simplify a task’s minimal required implementation and lower its energy costs. After decades of active research, we now appreciate how such intricate behaviors arise from locally specified but general, distributed processing. Indeed, the albatross needs no exactly detailed flight plan. Adaptive means that the system’s emergent information processing mechanisms respond to complex, unpredictable variations in the environment while maintaining the macroscopic functionality required for task execution, future learning, and survival. In this sense, these systems rely on a kind of self-programming: spontaneously generated, extended patterns of control and self-monitoring.

We propose to tackle the challenge of discovering the fundamental principles of adaptive emergent intrinsic computation and to experimentally demonstrate how thermodynamic forces can drive a system to be naturally programmable. The topic is particularly timely given the scale and sophistication of the adaptive systems that science and engineering are now tasked with predicting and designing. We take a novel approach that harnesses the most recent advances in nonlinear physics, nanoscale thermodynamics, genetic engineering, and high-performance computing. Recent remarkable progress in these arenas, together with our team’s deep expertise in them, suggests that our attack on the challenge of adaptive emergent intrinsic computation is likely to succeed, whereas the history of statistical physics, pattern formation, evolution, and inventive simulation is replete with partial insights at best and unproductive research cul-de-sacs at worst.

This is a transdisciplinary 3-year project to experimentally demonstrate and theoretically predict emergent adaptive computation using two unique platforms, one natural and one engineered, coupled with large-scale simulations that quantitatively bridge theory and experiment. The experimental platforms are carefully selected so that each focuses on particular theoretical challenges while informing progress on the others. The effort leverages recent success in designing and predicting the fundamental performance limits of thermodynamically embedded nanoscale information processing devices. Our team consists of three principal investigators (PIs) from a broad range of disciplines. On the theoretical side, we have James Crutchfield (PI, nonlinear dynamics, information and computation theories) and Erik Winfree (Co-PI, computation theory). On the experimental side, we have Michael Roukes (Co-PI, nanoscale and measurement physics, nonlinear dynamics) and, again, Erik Winfree (bioengineering, molecular programming, and algorithmic self-assembly).


Engines of Adaptation: Self-Programming via Emergent Thermodynamic Machines

NEWS

Army Awards $1.5M to Study Emergent Computation

Project Institutions

195 Physics

University of California at Davis

530-752-0600

California Institute of Technology
